Raven, a navigation system aboard the ISS, may soon allow NASA to build an autonomous system capable of servicing and refueling spacecraft in flight.

The current approach to space exploration is, from a materials perspective, rather wasteful: a spacecraft that runs out of fuel is usually retired, even if the rest of its hardware still works. Beyond that, many future NASA missions will require servicing and refueling technology as craft are sent farther and farther into space, well beyond the reach of NASA engineers.

But there's hope in the form of spacecraft that can autonomously rendezvous and dock with another spacecraft.

Current docking techniques require some element of manual input using camera feeds, such as the feed from a 2014 resupply craft docking with the ISS. Image courtesy of NASA

However, autonomous rendezvous and docking is, to put it lightly, difficult.

Consider two vehicles traveling at 16,000 miles per hour in the darkness of space. The servicing vehicle, which carries fuel, is steadily approaching the client vehicle (the craft being "chased"), which is running out of fuel. Since 99% of all satellites operating in space were not designed for in-flight servicing, the client carries no markings to make rendezvous easier.

Moreover, these vehicles are usually far from Earth, and the communication delays are considerable. This means ground operators cannot issue commands quickly and accurately enough to prevent collisions and ensure a smooth docking with the client. As a result, the servicer needs to track the client and complete the rendezvous on its own.
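To put the delay problem in numbers, here is a small sketch that computes the speed-of-light round trip for a command and its telemetry, and how much the gap between servicer and client shrinks in that time. The distances are rough, illustrative figures and the 0.1 m/s closing speed is a hypothetical value, not a mission parameter; real links add relay and processing latency on top of pure light time.

```python
# Speed of light in vacuum, m/s (exact by definition of the metre).
SPEED_OF_LIGHT = 299_792_458.0


def round_trip_delay_s(distance_m: float) -> float:
    """Time for a command to reach the vehicle and its telemetry to come back."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


def drift_during_delay_m(distance_m: float, closing_speed_m_s: float) -> float:
    """How much the servicer-client gap shrinks before the ground can react."""
    return closing_speed_m_s * round_trip_delay_s(distance_m)


if __name__ == "__main__":
    # Illustrative Earth-to-vehicle distances (approximate, not mission values).
    scenarios = {
        "Moon (~384,400 km)": 384_400e3,
        "Mars (~225 million km)": 225e9,
    }
    closing_speed = 0.1  # m/s, hypothetical final-approach closing speed
    for name, d in scenarios.items():
        delay = round_trip_delay_s(d)
        gap = drift_during_delay_m(d, closing_speed)
        print(f"{name}: round trip {delay:.1f} s, gap shrinks {gap:.1f} m")
```

At Mars-like distances the round trip is on the order of 25 minutes, during which even a slow approach closes by more than 100 m, which is why the servicer has to close the loop on board.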

NASA is working to develop sensors, vision processing algorithms, and high-speed, space-rated avionics so that a servicing spacecraft can track, match speed with, and dock with a target vehicle.

Raven is NASA’s big step toward making autonomous rendezvous in space a reality.

How Will Raven Advance Algorithms?

Raven is a technology module designed to test and advance the technologies needed to service a spacecraft in orbit. It collects sensor data, processes it with machine learning algorithms, and provides the results to engineers back on Earth. Raven will help scientists develop the estimation algorithms required to robotically approach and dock with an orbiting satellite and service it in flight.

Raven was sent to the International Space Station (ISS) at the end of February, reaching its destination on the 23rd. During its two-year mission, it will have the chance to observe about 50 trajectories of spacecraft rendezvousing with or departing from the ISS.
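As an illustration of what an "estimation algorithm" for rendezvous does, the sketch below runs a generic constant-velocity Kalman filter that turns noisy range measurements into estimates of range and closing rate. This is a textbook filter, not Raven's flight algorithm; the noise values, the 1 Hz sample spacing, and the 1 km / 0.5 m/s approach are all assumptions chosen for the example.

```python
import random
from dataclasses import dataclass, field


@dataclass
class RangeKalmanFilter:
    """Constant-velocity Kalman filter over the state [range (m), range rate (m/s)]."""

    r: float                # range estimate, m
    v: float = 0.0          # range-rate estimate, m/s (negative = closing)
    P: list = field(default_factory=lambda: [[100.0, 0.0], [0.0, 1.0]])  # state covariance
    q: float = 1e-4         # assumed process-noise spectral density, m^2/s^3
    meas_var: float = 0.25  # assumed range-measurement variance, m^2 (0.5 m sigma)

    def predict(self, dt: float) -> None:
        """Propagate with F = [[1, dt], [0, 1]] and add white-acceleration noise Q."""
        (a, b), (c, d) = self.P
        self.r += self.v * dt
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q * dt**3 / 3, b + dt * d + self.q * dt**2 / 2],
            [c + dt * d + self.q * dt**2 / 2, d + self.q * dt],
        ]

    def update(self, z: float) -> None:
        """Fuse one range measurement z (measurement matrix H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.meas_var      # innovation variance
        k0, k1 = a / s, c / s      # Kalman gain for range and range rate
        innovation = z - self.r
        self.r += k0 * innovation
        self.v += k1 * innovation
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]  # (I - K H) P


def track(measurements, dt=1.0):
    """Run the filter over evenly spaced range measurements; return final (range, rate)."""
    kf = RangeKalmanFilter(r=measurements[0])
    for z in measurements[1:]:
        kf.predict(dt)
        kf.update(z)
    return kf.r, kf.v


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical approach: starts 1 km out, closing at 0.5 m/s, 1 Hz range samples.
    zs = [1000.0 - 0.5 * t + rng.gauss(0.0, 0.5) for t in range(300)]
    r_est, v_est = track(zs)
    print(f"range ~ {r_est:.1f} m, range rate ~ {v_est:.3f} m/s")
```

The filter never measures velocity directly; it infers the closing rate from how successive ranges change, which is the kind of quantity a servicer needs in order to match speed with its client.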

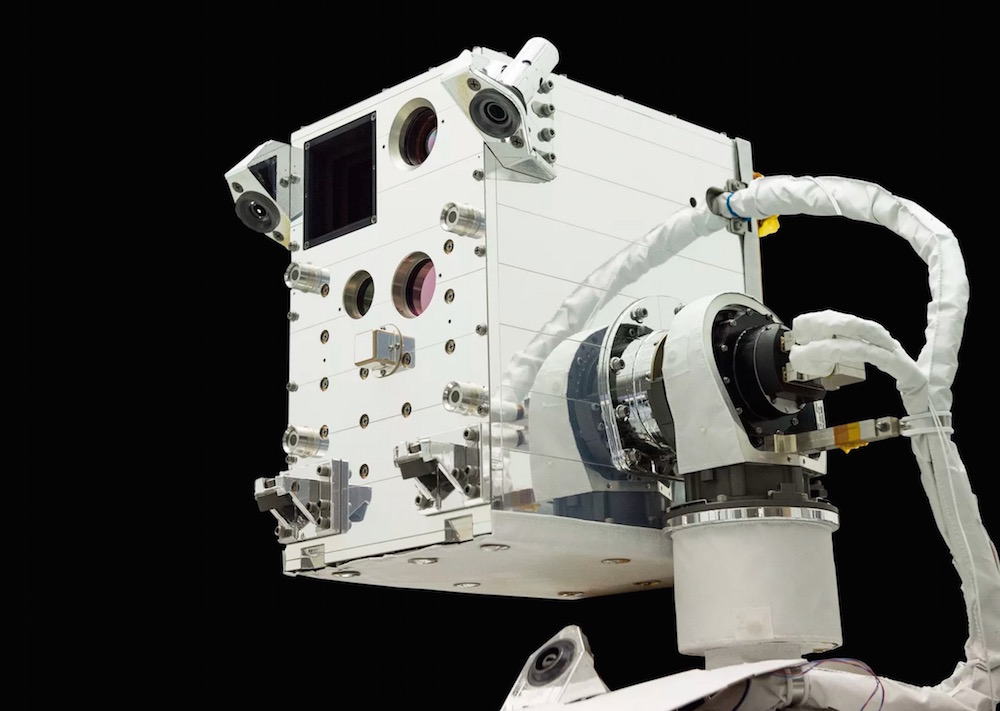

The Raven module. Image courtesy of NASA

Raven will observe each visiting vehicle from almost 1 km away and gather trajectory data down to a separation distance of approximately 20 meters. These observations will be used to advance the required real-time algorithms. Because the data comes from several different vehicles, it will be possible to understand how sensitive the algorithms are to variations in vehicle size and shape. And because at least five rendezvous dockings will be observed for each vehicle, scientists will be able to develop statistics that predict the algorithms' performance for a given vehicle size and shape.
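A minimal sketch of the per-vehicle statistics described above: group an error metric by vehicle and report its mean and sample standard deviation across the observed dockings. The vehicle names and error values here are made-up placeholders, not Raven data, and the error metric itself (for example, estimated-minus-true range) is an assumption.

```python
from statistics import mean, stdev


def summarize_by_vehicle(errors_by_vehicle):
    """Return {vehicle: (mean error, sample std dev, run count)}.

    Vehicles with fewer than two runs are skipped, because a sample
    standard deviation needs at least two observations.
    """
    summary = {}
    for vehicle, errors in errors_by_vehicle.items():
        if len(errors) < 2:
            continue
        summary[vehicle] = (mean(errors), stdev(errors), len(errors))
    return summary


if __name__ == "__main__":
    # Placeholder errors (m) for five hypothetical dockings per vehicle.
    observed = {
        "vehicle_A": [0.8, 1.1, 0.9, 1.0, 1.2],
        "vehicle_B": [1.5, 1.9, 1.7, 1.6, 2.0],
    }
    for name, (mu, sigma, n) in summarize_by_vehicle(observed).items():
        print(f"{name}: mean error {mu:.2f} m, std dev {sigma:.2f} m over {n} runs")
```

With five runs per vehicle, the spread is only a rough estimate; more dockings per vehicle would tighten it.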

Installation and Calibration of the System

After Raven is installed on the ISS, its operators will check out all command, telemetry, and data paths. Next, to check out the sensors and calibrate the system, ground operators will point the sensors where they can see the Earth, the ISS, and vehicles already docked to the station.

One day before a visiting vehicle approaches the ISS, the operators will check out Raven's subsystems, including the processor, any software or firmware updates, the two-axis gimbal, and the sensors. The sensors will then be oriented toward the visiting vehicle, and the vision processing algorithms will start running as soon as the vehicle is within 1 km of the ISS. The system may employ different levels of autonomy to keep the sensors pointed at the vehicle.
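Keeping the sensors on a moving vehicle with a two-axis gimbal comes down to converting the target's relative position into two angles and slewing each axis toward them. The sketch below assumes a simple sensor frame (x along the boresight at zero angles, y to the left, z up) and a hypothetical per-step slew limit; Raven's actual frames, limits, and control law are not described in this article.

```python
import math


def pointing_angles_deg(x, y, z):
    """Azimuth and elevation (deg) that aim the boresight at a target at (x, y, z).

    Assumed frame: x along the boresight at zero angles, y to the left, z up.
    """
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation


def step_toward(current_deg, target_deg, max_step_deg):
    """Move one gimbal axis toward its target without exceeding the slew limit."""
    delta = target_deg - current_deg
    return current_deg + max(-max_step_deg, min(max_step_deg, delta))


if __name__ == "__main__":
    az_cmd, el_cmd = pointing_angles_deg(500.0, 500.0, 0.0)  # hypothetical target
    az, el = 0.0, 0.0
    for _ in range(30):  # hypothetical 2 deg per control step
        az = step_toward(az, az_cmd, 2.0)
        el = step_toward(el, el_cmd, 2.0)
    print(f"gimbal at az={az:.1f} deg, el={el:.1f} deg")
```

Recomputing the angles from each new position estimate and feeding them to the rate-limited step is one simple way a system could keep pointing autonomously rather than waiting for ground commands.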

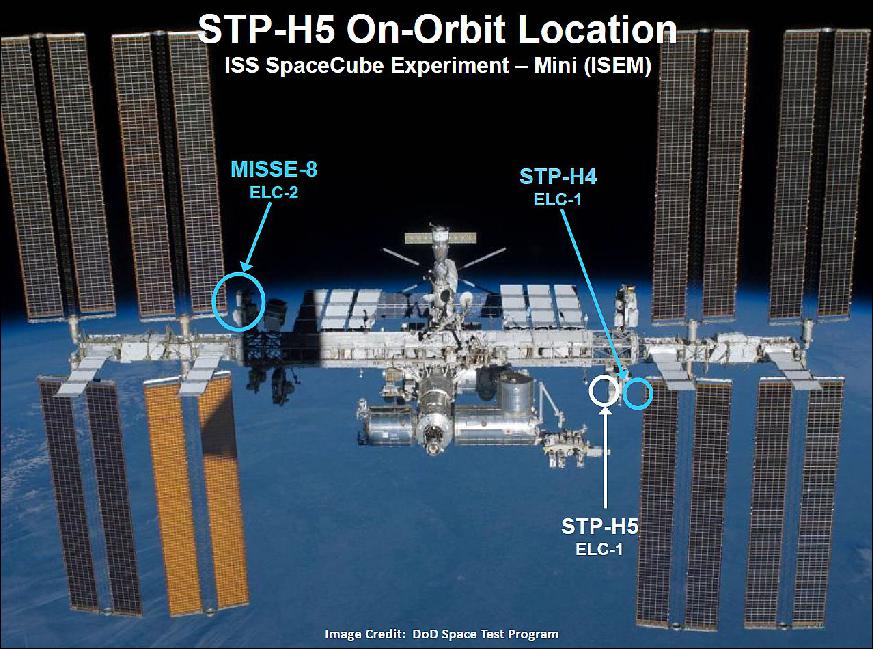

The location of Raven on ISS. Image courtesy of the DoD Space Test Program via eoPortal Directory

What Hardware Will Raven Need?

Raven will test several sensors: a visible camera with a variable field-of-view lens, a long-wave infrared camera, and a short-wave flash LiDAR. Data from these sensors will be collected at distances within 100 meters and at rates of up to 1 Hz. Because the sensors are installed on the station rather than on each visiting vehicle, the same hardware can be reused across a large number of missions, which reduces costs considerably.

In addition to advancing the algorithms and testing the sensors, Raven will also examine whether a common hardware suite is sufficient for a variety of rendezvous operations, such as docking with a cooperative target and landing on a non-uniform asteroid.
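One common way a multi-sensor suite can combine its readings is inverse-variance weighting, where each sensor's estimate counts in proportion to its precision. The sketch below assumes independent, unbiased estimates of the same range; the sensor values and variances in the usage example are hypothetical, and the article does not say how Raven fuses its sensors.

```python
def fuse_inverse_variance(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1 / variance, so more precise sensors count
    more. Returns the fused value and its (smaller) variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical range readings (m) and variances (m^2) for one instant.
    readings = [
        (50.4, 1.0),   # visible camera
        (49.0, 4.0),   # infrared camera
        (50.05, 0.01), # flash LiDAR
    ]
    fused, var = fuse_inverse_variance(readings)
    print(f"fused range {fused:.2f} m, variance {var:.4f} m^2")
```

The fused variance is always smaller than the best single sensor's, which is one reason to carry several sensor types even when one of them dominates.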

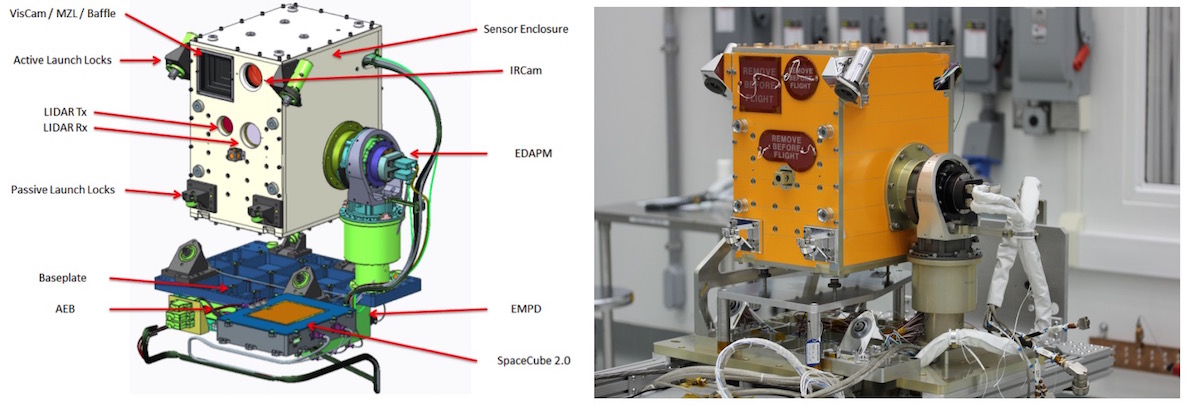

Raven's CAD model, shown in the accompanying figure, reveals a sensor enclosure that houses its sensors, such as the optical sensors, along with thermal accommodations including heaters, thermostats, and thermistors.

The CAD model illustrating the different sensors and subsystems of Raven (left) and the flight hardware with the sensor enclosure and ground support equipment (GSE) (right). Image courtesy of NASA.

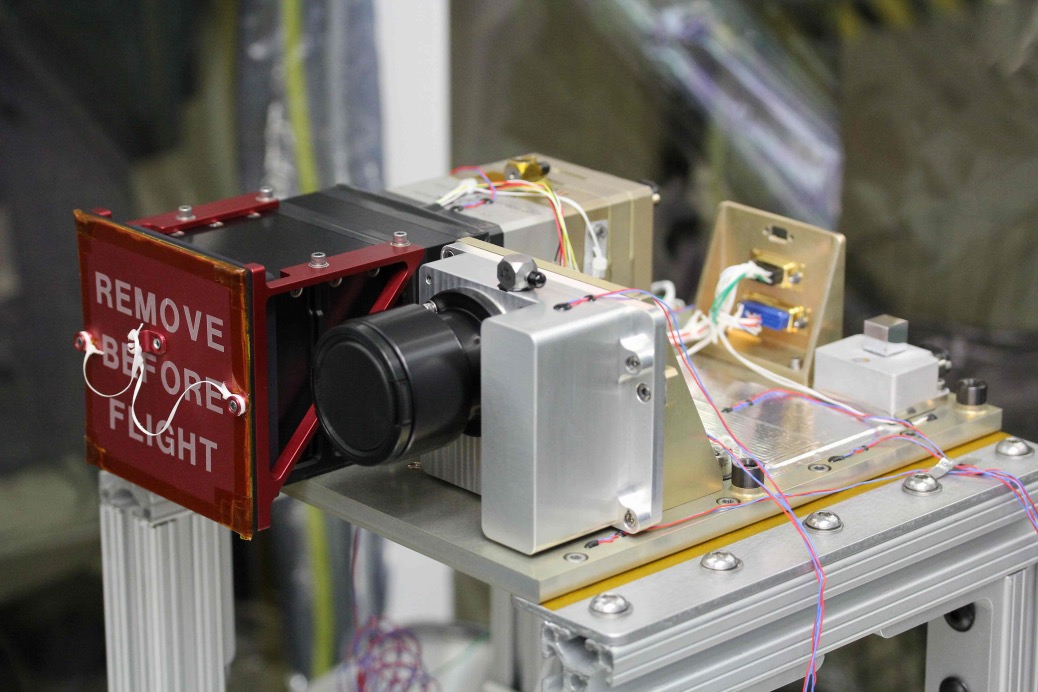

The figure of the Raven optical bench shows three of Raven's sensors: the visible camera (VisCam), the infrared camera (IRCam), and the Inertial Measurement Unit (IMU).

The IMU contains three MEMS gyroscopes, three MEMS accelerometers, and three inclinometers. Raven also uses a separate temperature sensor for each of these gyroscopes, accelerometers, and inclinometers. This lets the system account for temperature variations and interpret the inertial readings more accurately.
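Per-sensor temperature readings are typically used with a calibration model that predicts how each sensor's bias drifts with temperature. The sketch below assumes a first-order (linear) bias model with hypothetical coefficients from ground calibration; real IMU calibrations often also model scale factor and higher-order terms, and Raven's actual model is not given in this article.

```python
from dataclasses import dataclass


@dataclass
class LinearTempModel:
    """Hypothetical first-order calibration: bias(T) = bias_ref + slope * (T - t_ref)."""

    bias_ref: float       # sensor bias at the reference temperature (sensor units)
    slope: float          # bias change per degree C (sensor units / degC)
    t_ref: float = 25.0   # calibration reference temperature, degC

    def bias(self, temp_c: float) -> float:
        return self.bias_ref + self.slope * (temp_c - self.t_ref)


def compensate(raw_readings, temps_c, models):
    """Subtract each axis's temperature-dependent bias, using that axis's own
    temperature sensor reading."""
    return [raw - m.bias(t) for raw, t, m in zip(raw_readings, temps_c, models)]


if __name__ == "__main__":
    # Hypothetical gyro calibration for three axes (deg/s and deg/s per degC).
    gyro_models = [LinearTempModel(0.010, 0.001),
                   LinearTempModel(-0.005, 0.0008),
                   LinearTempModel(0.002, -0.0005)]
    raw = [0.52, -0.10, 0.03]   # raw angular rates, deg/s
    temps = [35.0, 30.0, 20.0]  # per-axis temperature readings, degC
    print(compensate(raw, temps, gyro_models))
```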

The Raven optical bench. Image courtesy of NASA.

As shown in the CAD model above, the flash LiDAR is mounted to the front panel of the sensor enclosure, and the model shows its transmit and receive optics. The flash LiDAR illuminates the scene with a laser pulse and measures the reflected light to determine the distance to each reflecting surface. Raven can do this at a rate of up to 30 Hz.
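The ranging principle behind a flash LiDAR is time of flight: light travels out and back, so the distance is half the round-trip time multiplied by the speed of light, computed for every pixel of the detector at once. The sketch below shows that conversion and the per-frame time budget implied by 30 Hz; the 2-D list of round-trip times is a hypothetical stand-in for the detector's output format.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
FRAME_RATE_HZ = 30.0
FRAME_PERIOD_S = 1.0 / FRAME_RATE_HZ  # ~33 ms available per depth frame at 30 Hz


def tof_to_range_m(round_trip_s: float) -> float:
    """Range to a reflecting surface from a pulse's round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def depth_image(round_trip_times):
    """Convert a 2-D grid of per-pixel round-trip times (s) into ranges (m)."""
    return [[tof_to_range_m(t) for t in row] for row in round_trip_times]


if __name__ == "__main__":
    # A surface 20 m away returns the pulse after about 133 ns.
    t_20m = 2 * 20.0 / SPEED_OF_LIGHT
    print(f"round trip for 20 m: {t_20m * 1e9:.1f} ns")
    print(depth_image([[t_20m, 2 * 21.0 / SPEED_OF_LIGHT]]))
    print(f"frame period at {FRAME_RATE_HZ:.0f} Hz: {FRAME_PERIOD_S * 1e3:.1f} ms")
```

The nanosecond scale of these timings is why flash LiDARs rely on dedicated timing electronics rather than general-purpose software.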

Moreover, the LiDAR uses an optical switch to toggle between a 20-degree and a 12-degree transmit field of view (FOV). The narrower FOV concentrates the laser energy on a smaller area, which can considerably increase the signal-to-noise ratio of the LiDAR readings.
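A rough geometric estimate shows why the narrower setting helps: the illuminated patch grows with range and FOV, and the same pulse energy spread over a smaller patch means more returned light per pixel. This sketch assumes a square beam with uniform illumination and equal pulse energy in both modes; the actual signal-to-noise gain also depends on detector noise, background light, and target reflectivity, so treat the ratio as an idealized upper-level figure.

```python
import math


def footprint_width_m(range_m: float, fov_deg: float) -> float:
    """Width of the illuminated patch at a given range for a full-angle FOV."""
    return 2.0 * range_m * math.tan(math.radians(fov_deg) / 2.0)


def irradiance_gain(wide_fov_deg: float, narrow_fov_deg: float) -> float:
    """Factor by which on-target irradiance rises when the same pulse energy is
    squeezed from the wide FOV into the narrow one (square beam, uniform spread)."""
    ratio = footprint_width_m(1.0, wide_fov_deg) / footprint_width_m(1.0, narrow_fov_deg)
    return ratio ** 2


if __name__ == "__main__":
    for fov in (20.0, 12.0):
        print(f"{fov:.0f} deg FOV: {footprint_width_m(100.0, fov):.1f} m wide at 100 m")
    print(f"irradiance gain, 20 -> 12 deg: {irradiance_gain(20.0, 12.0):.2f}x")
```

Under these assumptions, switching from 20 to 12 degrees puts roughly 2.8 times as much light on each square meter of the target.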

Raven's processing unit is a SpaceCube 2.0 Engineering Model. It uses three Xilinx Virtex-5 FPGAs: one acts as the main board controller, and the other two host the application-specific code that implements the Raven flight software.

Raven's flight science data processor. Image courtesy of NASA

According to Ben Reed, deputy division director for the Satellite Servicing Projects Division (SSPD) at NASA’s Goddard Space Flight Center in Maryland, autonomous rendezvous is crucial for future NASA missions, and Raven is maturing this never-before-attempted technology.

For a more detailed explanation of the technology, please refer to the NASA technical article on Raven.
