Here’s a snapshot of where our display technology is at right now—and a little bit of what is just around the corner.

The immersive experience of virtual and augmented reality depends heavily on the display technology behind it.

If a display is too heavy, lacks resolution, draws too much power, or offers too narrow a field of view, the illusion suffers and the display becomes less useful in practice.

This past May, the International Data Corporation (IDC) released a report forecasting that $27 billion USD would be spent on VR/AR in 2018, a 92% increase over 2017. This number is expected to reach $53 billion USD by 2023.

This growth certainly incentivizes the improvement of display technology, whether it is being used for industrial or leisure purposes.

Recreating Human Vision: 1443 ppi OLED

Google and LG have been collaborating on R&D efforts to develop a head-mounted display that recreates natural human vision as closely as possible. The specs needed to achieve this include a field of view (FoV) of 160 degrees (horizontal) by 150 degrees (vertical) and a per-eye resolution of 9600x9000 pixels for 20/20 acuity.
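As a rough check on why that resolution goes with that field of view, the sketch below divides the pixel counts by the FoV angles. It assumes pixels are spread evenly across the FoV (a simplification) and uses the conventional definition of 20/20 acuity as resolving detail of about one arcminute. The function and variable names are illustrative only.

```python
# Figures quoted in the text for the ideal human-vision display.
H_PIXELS, V_PIXELS = 9600, 9000    # per-eye resolution
H_FOV_DEG, V_FOV_DEG = 160, 150    # field of view in degrees


def pixels_per_degree(pixels: int, fov_deg: float) -> float:
    """Average angular pixel density, assuming an even spread over the FoV."""
    return pixels / fov_deg


h_ppd = pixels_per_degree(H_PIXELS, H_FOV_DEG)
v_ppd = pixels_per_degree(V_PIXELS, V_FOV_DEG)

# One degree is 60 arcminutes, so this is the angle covered by one pixel.
arcmin_per_pixel = 60 / h_ppd

print(f"horizontal: {h_ppd} px/deg, vertical: {v_ppd} px/deg")
print(f"each pixel spans about {arcmin_per_pixel} arcminute(s)")
```

Both axes come out at 60 pixels per degree, or one pixel per arcminute, which is the usual shorthand for 20/20 acuity.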

A paper published in the Journal of the Society for Information Display discusses the challenges in achieving these specs. Two of the biggest are the pixel pitch needed to match human visual acuity and the refresh rate required.

First, the pixel pitch: this is the center-to-center distance between adjacent pixels, and it determines the optimal viewing distance for a given resolution. To recreate human visual acuity, this distance would have to vary across the display. That would add considerable complexity to the design, so a uniform pixel pitch was calculated instead at 11.4 µm, which would require a 4.3-inch display with 2138 ppi for a 160-degree FoV.

For the refresh rate, the line time available to refresh a row of pixels would be only 694 ns, which would require a pixel clock of 14.3 GHz.
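The two timing figures can be cross-checked against the 9600x9000 resolution. The sketch below assumes the line time is on the nanosecond scale (694 ns), which is the reading under which the pixel clock and the row width agree. It also assumes rows are scanned one at a time with no vertical blanking, so the refresh figure is an upper bound rather than a spec from the paper.

```python
LINE_TIME_S = 694e-9      # assumed: 694 ns to refresh one row
PIXEL_CLOCK_HZ = 14.3e9   # pixel clock quoted in the text
ROW_WIDTH_PX = 9600       # pixels in one row (horizontal resolution)
ROWS = 9000               # rows per frame (vertical resolution)

# Number of pixel-clock ticks that fit into one line time.
clocks_per_line = PIXEL_CLOCK_HZ * LINE_TIME_S

# Ticks left over after the visible pixels, e.g. for horizontal blanking.
spare_clocks = clocks_per_line - ROW_WIDTH_PX

# Highest frame rate reachable if every row takes exactly one line time.
max_refresh_hz = 1 / (ROWS * LINE_TIME_S)

print(f"clock ticks per line: {clocks_per_line:.0f}")
print(f"ticks beyond the {ROW_WIDTH_PX} visible pixels: {spare_clocks:.0f}")
print(f"upper bound on refresh rate: {max_refresh_hz:.0f} Hz")
```

Roughly 9,900 clock ticks fit in each line, slightly more than the 9600 visible pixels, which is what you would expect once some blanking overhead is included.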

Achieving these specs would be incredibly difficult, and the result still wouldn't be usable because it would require heavy lenses, complicated circuitry, and so on. A balance of trade-offs was therefore needed, and the end result is a 4.3-inch display providing a 100-degree (horizontal) by 96-degree (vertical) FoV, a 17.6 µm pixel pitch, and 1443 ppi. The display is driven using an n-type LTPS TFT backplane to reduce ghost image artifacts, and the video stream is converted for the display using an FPGA.
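Pixel pitch and pixels per inch are two ways of stating the same quantity, so one can be derived from the other. A minimal sketch of the conversion follows, using the fact that one inch is 25,400 µm. The helper names are illustrative.

```python
UM_PER_INCH = 25_400  # micrometres in one inch


def ppi_from_pitch_um(pitch_um: float) -> float:
    """Pixels per inch for a given centre-to-centre pixel pitch in µm."""
    return UM_PER_INCH / pitch_um


def pitch_um_from_ppi(ppi: float) -> float:
    """Pixel pitch in µm for a given pixels-per-inch density."""
    return UM_PER_INCH / ppi


print(round(ppi_from_pitch_um(17.6)))      # density of the experimental panel
print(round(pitch_um_from_ppi(1443), 1))   # and back to the pitch in µm
```

For the experimental panel, a 17.6 µm pitch works out to about 1443 ppi, matching the figure in the section heading.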

The experimental display is reported to be the highest-resolution OLED display developed to date.

An example rendering from the experimental OLED using foveated rendering. Image courtesy of Journal of the Society for Information Display.

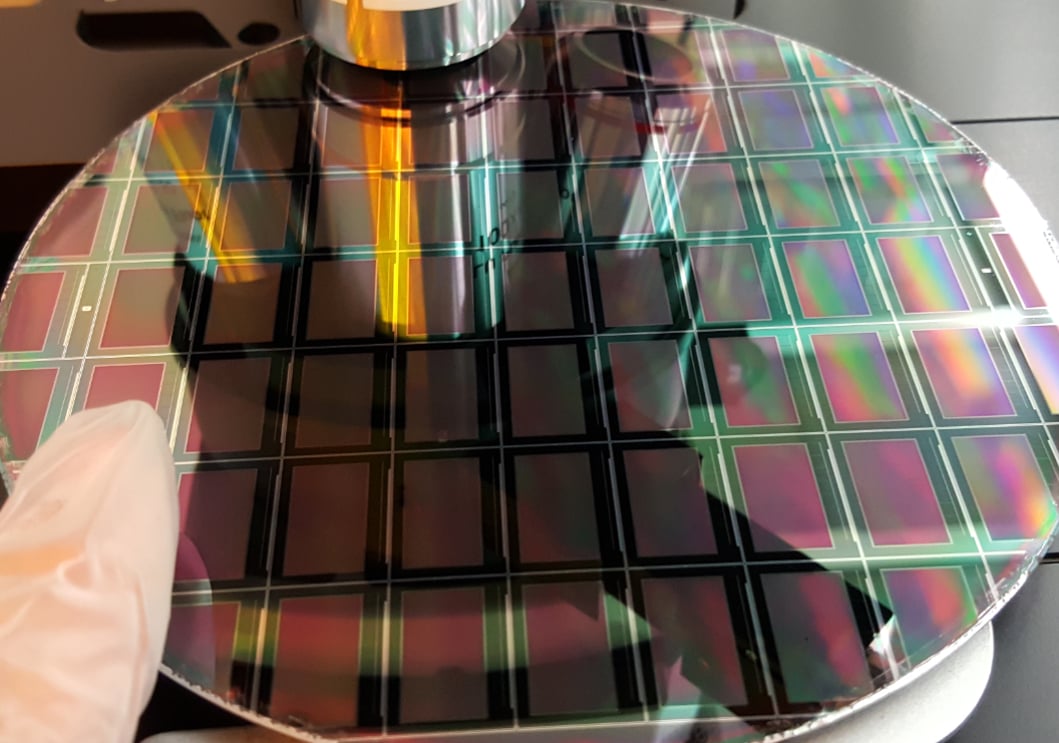

Monolithic MicroLED on GaN-on-Silicon Wafers

Plessey Semiconductors and Jasper Display Corp (JDC) announced a partnership to develop a monolithic microLED display using GaN-on-Silicon wafers and JDC’s eSP70 silicon backplane.

GaN-on-Silicon uses gallium nitride on a silicon substrate as the semiconductor. The material is thermally efficient, provides an excellent light-emitting surface, and suffers a smaller drop in light-emission efficiency when scaled. It first showed potential in RF and microwave applications but is expected to become more mainstream in other applications as these advantages are realized.

GaN-on-Silicon wafer. Image courtesy of Plessey Semiconductors.

These combined properties reduce the power requirements for bright images on LED displays. The eSP70 backplane can provide a 1920x1080 resolution at a pixel pitch of 8 µm, and will be paired with Plessey’s microLED on a GaN-on-Silicon wafer.
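To get a feel for how small such a panel is, the active area can be estimated from the resolution and pixel pitch alone. The sketch below assumes square pixels on a uniform 8 µm grid and ignores any border around the active area. The variable names are illustrative.

```python
import math

H_PIXELS, V_PIXELS = 1920, 1080   # resolution quoted for the backplane
PITCH_UM = 8.0                    # pixel pitch in micrometres
MM_PER_INCH = 25.4

# Active-area dimensions: pixel count times pitch, converted from µm to mm.
width_mm = H_PIXELS * PITCH_UM / 1000
height_mm = V_PIXELS * PITCH_UM / 1000

# Diagonal of the active area, in mm and in inches.
diagonal_mm = math.hypot(width_mm, height_mm)
diagonal_in = diagonal_mm / MM_PER_INCH

print(f"active area: {width_mm:.2f} mm x {height_mm:.2f} mm")
print(f"diagonal: {diagonal_mm:.1f} mm ({diagonal_in:.2f} in)")
```

Under these assumptions the active area is about 15.4 mm by 8.6 mm, with a diagonal of roughly 0.7 inch, which fits the small form factor the companies are targeting.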

The companies are specifically targeting VR/AR applications with low power, low cost, and small form factor displays.

Foveated Rendering

Foveated rendering is not itself a hardware technology, but it is still an important part of improving display technology for VR/AR. The technique’s name comes from the fovea centralis, the part of the retina responsible for sharp central vision, such as when we are reading.

In foveated rendering, eye-tracking technology is used in combination with the VR/AR head-mounted display to determine where in the image the user is focusing their gaze. Based on that, the system renders the parts of the image inside the user’s foveal viewing range most sharply and gradually softens the image toward the peripheral viewing range.

This helps overcome the hardware challenges for rendering a high-resolution image stream and lowers the workload on the GPU or other specialized hardware.
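The gaze-dependent falloff described above can be sketched as a function that maps a pixel's angular distance from the gaze point to a rendering-resolution scale. This is a minimal illustration, not how any particular vendor implements it. The zone sizes (`fovea_deg`, `periphery_deg`), the minimum scale, and the linear falloff are all hypothetical values chosen for the example, and the angle is computed with a flat small-angle approximation.

```python
import math


def eccentricity_deg(px, py, gaze_x, gaze_y, pixels_per_degree):
    """Approximate angle between a pixel and the gaze point, in degrees.

    Uses a flat small-angle approximation: screen distance / pixel density.
    """
    return math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree


def resolution_scale(ecc_deg, fovea_deg=5.0, periphery_deg=30.0, min_scale=0.25):
    """Fraction of full resolution to render at a given eccentricity.

    Full resolution inside the foveal zone, min_scale beyond the periphery
    threshold, and a linear blend in between (all thresholds hypothetical).
    """
    if ecc_deg <= fovea_deg:
        return 1.0
    if ecc_deg >= periphery_deg:
        return min_scale
    t = (ecc_deg - fovea_deg) / (periphery_deg - fovea_deg)
    return 1.0 + t * (min_scale - 1.0)


# Example: gaze at the centre of a 2000x2000 view with 20 px/deg density.
gaze = (1000, 1000)
for pixel in [(1000, 1000), (1350, 1000), (1900, 1000)]:
    ecc = eccentricity_deg(*pixel, *gaze, pixels_per_degree=20)
    print(pixel, f"{ecc:.1f} deg ->", f"scale {resolution_scale(ecc):.3f}")
```

A real renderer would apply a scale like this per tile or per region rather than per pixel, and would update the gaze point every frame from the eye tracker.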

Foveated rendering was first demonstrated at CES 2016 by SensoMotoric Instruments and Omnivision. Since then, it has been adopted by NVIDIA, Google, and LG, with others likely to follow.

What other AR/VR display technologies have caught your eye recently?
