Text-to-speech has been a frustrating area for developers and users alike (and the butt of many tech jokes). But Google's recently demonstrated Duplex, their latest AI text-to-speech engine, shows extraordinary, and somewhat worrying, results.

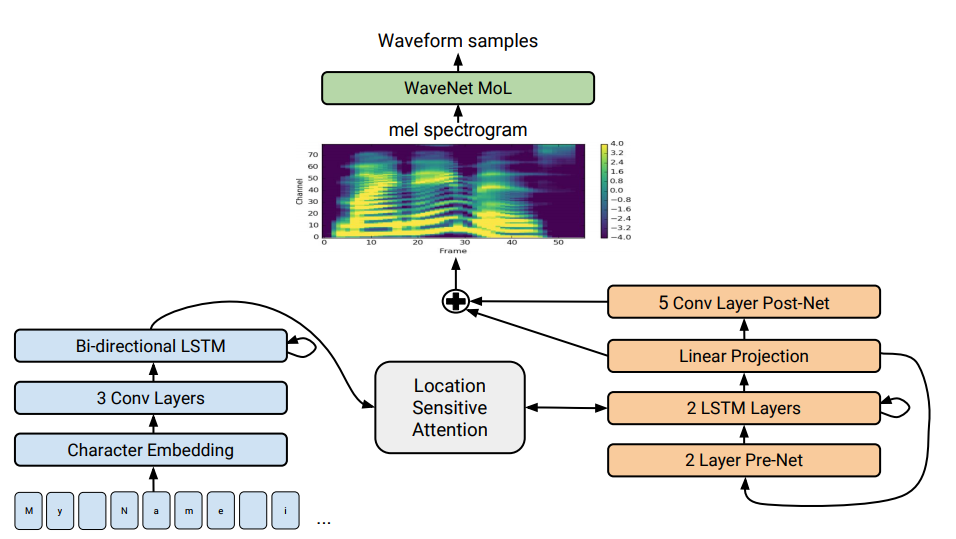

Tacotron 2 not only sounds natural but also understands punctuation and emphasis (e.g., “HELLO” vs “Hello”), pronounces identically spelled words differently (“Robin will present a present to his friend”), and can even speak words that are spelled incorrectly.

Image courtesy of ai.googleblog.com

This engine is incredibly impressive, with the generated speech being indistinguishable from human speech. But Google has continued its work with Tacotron 2 to produce Google Duplex, demonstrated in a Google I/O Keynote by Sundar Pichai on May 8th.

Google Duplex not only imitates human speech nearly perfectly but also uses machine learning to understand human speech and generate appropriate responses in a conversation.

Watch the video below to see Google Duplex in action.

In the video, a Google Assistant makes a call to a hair salon, requests a time for an appointment, and adapts to negotiate an appropriate time slot—all without the person on the other end of the phone ever realizing that they were speaking to a machine.

A second example includes a call to make a reservation at a restaurant. Despite the human's accented English, the Assistant is able to request a reservation, navigate a couple of misunderstandings, understand that reservations aren't necessary for parties of fewer than five people, and then ask how long the wait time is without a reservation, unguided by a human. Again, the person on the other end of the line wasn't aware that they were speaking to a machine.

Google Duplex represents an incredible stride in understanding nuances in human speech. Most (if not all) spoken command systems for computers require an announcement followed by a carefully constructed sentence. (For example, “Google, open, documents” or “Cortana, tell, me, a, joke”.)

Google Duplex, on the other hand, can understand the context of a sentence, as with the restaurant reservation example from the video.
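To make the contrast concrete, here is a minimal Python sketch of the rigid "announcement plus fixed phrase" style of command handling described above. The wake words, the command table, and the `parse_command` function are all invented for illustration; this is not how Google Assistant, Cortana, or Duplex is actually implemented.

```python
import re

# Hypothetical wake words and command table, invented for this example.
WAKE_WORDS = ("google", "cortana")
COMMANDS = {
    "open documents": "OPEN_DOCUMENTS",
    "tell me a joke": "TELL_JOKE",
}

def parse_command(utterance):
    """Return an action name, or None if the utterance does not match exactly."""
    # Lowercase the text and keep only the words, dropping punctuation.
    words = re.findall(r"[a-z']+", utterance.lower())
    if not words or words[0] not in WAKE_WORDS:
        return None  # no announcement, so the speaker is ignored
    phrase = " ".join(words[1:])
    return COMMANDS.get(phrase)  # exact phrase match only, no context

print(parse_command("Google, open documents"))              # OPEN_DOCUMENTS
print(parse_command("Cortana, tell me a joke"))             # TELL_JOKE
print(parse_command("Cortana, could you tell me a joke?"))  # None
```

The last call fails even though a person would understand it immediately. That gap between exact phrase matching and understanding a sentence in context is the one Duplex is trying to close.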

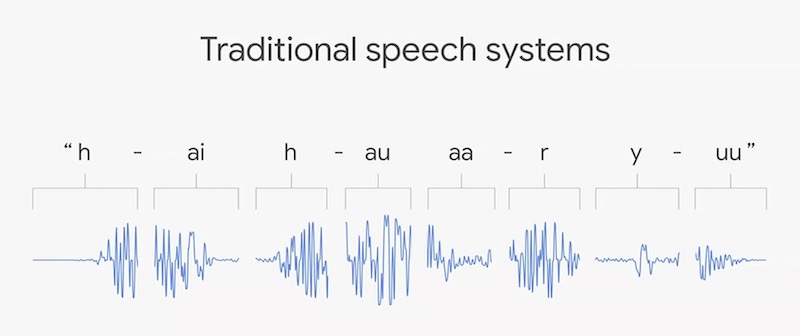

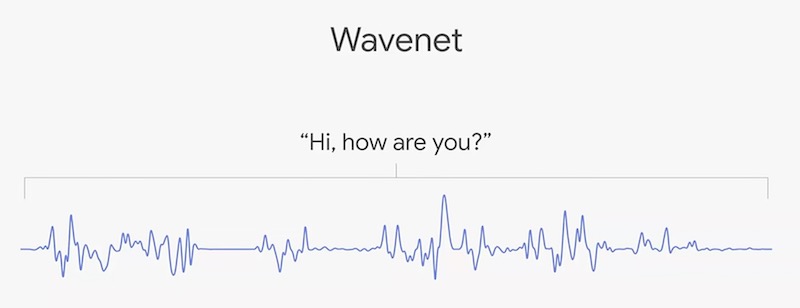

Google's WaveNet voice modeling is one of the secrets to Duplex's success, generating more natural human speech than previous text-to-voice systems.

Visual representation of WaveNet's more holistic speech system approach. Screenshots courtesy of Google

Tacotron 2 and WaveNet are both pivotal to Google Duplex's success, but such complex systems need a lot of processing power. That's where Tensor Processing Units come in.

Scaling Computational Architecture

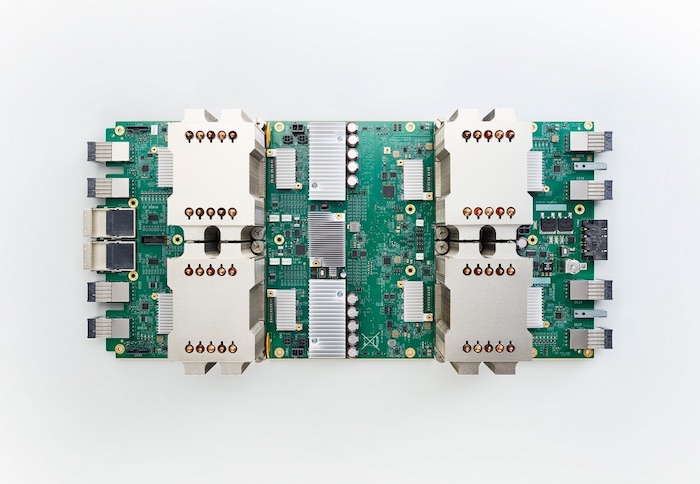

Google Duplex's extreme processing needs call for Google's TPUs, or tensor processing units. TPUs were developed by Google specifically to accelerate AI development, making them some of the most ambitious and specialized ASICs to date. Running this kind of workload locally on a device is likely many years away, so Google has focused on making TPUs available for interactions with (and de facto training from) the public via their Google Cloud platform. Hence, the second generation of these chips, formally called TPU 2.0, is also known as the "Cloud TPU".

A TPU 2.0 or "Cloud TPU". Image courtesy of Google.

These TPUs were arranged into "pods" of 64 individual chips. These pods function as supercomputers, providing over 10 petaflops to dedicate to machine learning.

The TPU "pods". Image courtesy of Google
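As a rough sanity check on those pod figures, the short Python sketch below divides the quoted pod throughput by the quoted unit count. It uses only the article's numbers (64 units per pod, "over 10 petaflops"), treats 10 petaflops as a lower bound, and assumes the work is spread evenly across the pod. The function name is made up for illustration.

```python
CHIPS_PER_POD = 64    # unit count quoted in the article
POD_PETAFLOPS = 10.0  # "over 10 petaflops", treated here as a lower bound

def per_chip_teraflops(pod_petaflops, chips):
    """Average throughput per unit in teraflops (1 petaflop = 1000 teraflops)."""
    return pod_petaflops * 1000.0 / chips

print(per_chip_teraflops(POD_PETAFLOPS, CHIPS_PER_POD))  # 156.25
```

Under these assumptions, each unit in a pod contributes at least roughly 156 teraflops.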

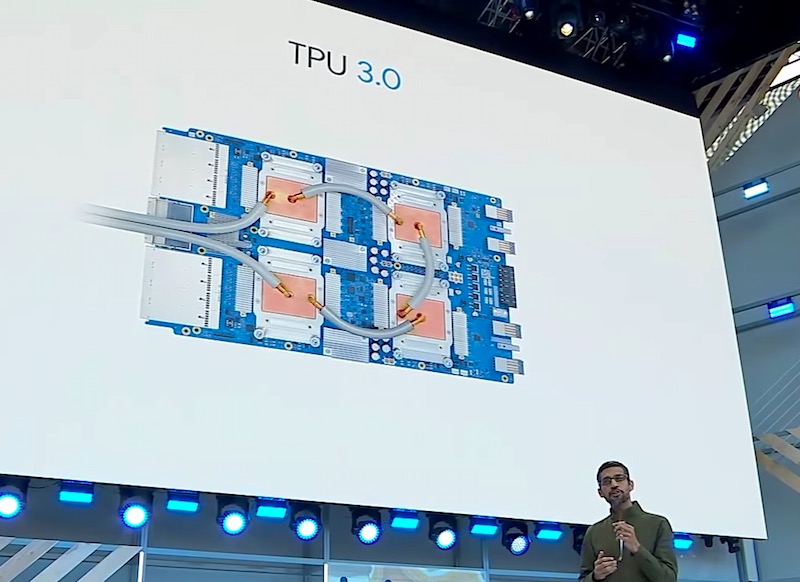

Now, at Google I/O, Pichai also announced the next generation of these chips, TPU 3.0. This new design still functions in pods but, for the first time, requires liquid cooling in Google's data centers.

TPU 3.0, unveiled at Google I/O. Screenshot courtesy of Google.

Each TPU 3.0 pod is 8x more powerful than its 2.0 predecessor, delivering "well over 100 petaflops", according to Pichai. This is the beating heart of Google's attempts to scale their computational architecture to support ever-more ambitious machine learning and AI breakthroughs.

The Ethical Pickle

For all of its technical magnificence, Google Duplex's debut brings up some discomfort along with wonder. What's creepy about Google Duplex? Part of the issue seems to be that the goal of the demonstration is to deceive a listener. Duplex even inserts human-sounding fillers such as “aaah” and “hmmm” into conversation to support natural speech rhythms and emulate common responses.

This moment from the hair appointment call demonstration got a laugh from the audience at Google I/O, likely because it sounded so surprisingly human.

This presents a bit of an ethical pickle in terms of AI as we're presented with the most visceral version of the Turing test so far (though it's worth noting that these examples did not technically pass the Turing test, which would require a longer amount of time for an evaluator to assess the responses).

Would a human behave differently if they knew they were interacting with a non-human? Is it problematic that a company can so easily imitate human speech? Should companies like Google let people know when they're interacting with a non-human?

One of the major concerns about such a system is that the voice of Duplex is learned from an individual. That is, Google's text-to-speech has traditionally been developed by learning from and reproducing samples from a human. In fact, one of the six new Assistant voices available will be singer John Legend, who recorded many speech samples so that Google Assistant will be able to reply in his voice.

This means that, in theory, Google Duplex could mimic anyone’s voice if given enough recorded samples. It's easy to imagine Duplex bypassing voice recognition security such as that used in telephone banking.

Duplex could take this further and be capable of impersonating a human. Imagine the case where Duplex is used to harass someone on the phone using another’s voice in an attempt at intimidation. Imagine a situation in which a public figure's voice was borrowed for unsavory or illegal purposes. The interactions in question would appear to be genuine, the spoken words would sound human, and the flow of the language would be natural.

While Duplex is clearly intended for consumer use—from understanding commands more effectively to taking on the task of basic phone conversations—it also has myriad other uses that we'll doubtlessly discover in the coming years.

Regardless of its applications, the future of text-to-speech is suddenly here. And we may not even realize we're interacting with it.

Would you be creeped out to learn you'd just conversed with an AI? Or would you think it's fascinating? Share your thoughts in the comments below.
